Uintah and Related C-SAFE Publications

2012

J. Schmidt, M. Berzins, J. Thornock, T. Saad, J. Sutherland.

“Large Scale Parallel Solution of Incompressible Flow Problems using Uintah and hypre,” SCI Technical Report, No. UUSCI-2012-002, SCI Institute, University of Utah, 2012.

The Uintah Software framework was developed to provide an environment for solving fluid-structure interaction problems on structured adaptive grids on large-scale, long-running, data-intensive problems. Uintah uses a combination of fluid-flow solvers and particle-based methods for solids together with a novel asynchronous task-based approach with fully automated load balancing. As Uintah is often used to solve compressible, low-Mach combustion applications, it is important to have a scalable linear solver. While there are many such solvers available, the scalability of those codes varies greatly. The hypre software offers a range of solvers and preconditioners for different types of grids. The weak scalability of Uintah and hypre is addressed for particular examples when applied to an incompressible flow problem relevant to combustion applications. After careful software engineering to reduce start-up costs, much better than expected weak scalability is seen for up to 100K cores on NSF's Kraken architecture and up to 200K+ cores on DOE's new Titan machine.

Keywords: Uintah, C-SAFE

J. Sutherland.

“Graph-Based Parallel Task Scheduling and Algorithm Generation for Multiphysics PDE Software,” In Proceedings of the 2012 SIAM Parallel Processing Conference, Savannah, GA, 2012.

L.T. Tran.

“Numerical Study and Improvement of the Methods in Uintah Framework: The Material Point Method and the Implicit Continuous-Fluid Eulerian Method,” Note: Advisor: Martin Berzins, School of Computing, University of Utah, December, 2012.

The Material Point Method (MPM) and the Implicit Continuous-fluid Eulerian method (ICE) have been used to simulate and solve many challenging problems in engineering applications, especially those involving large deformations in materials and multimaterial interactions. These methods were implemented within the Uintah Computational Framework (UCF) to simulate explosions, fires, and other fluid and fluid-structure interaction problems. To know whether the simulations represent the solutions of the actual mathematical models, it is important to fully understand the accuracy of these methods. At the time this research was initiated, hardly any error analysis had been done on these two methods, though the range of their applications was impressive. This dissertation undertakes an analysis of the errors in computational properties of MPM and ICE in the context of model problems from compressible gas dynamics which are governed by the one-dimensional Euler system. The analysis for MPM includes the analysis of errors introduced when the information is projected from particles onto the grid and when the particles cross the grid cells. The analysis for ICE includes the analysis of spatial and temporal errors in the method, which can then be used to improve the method's accuracy in both space and time. The implementation of ICE in UCF, which is referred to as Production ICE, does not perform as well as many current methods for compressible flow problems governed by the one-dimensional Euler equations: the obtained numerical solutions exhibit unphysical oscillations and discrepancies in the shock speeds. By examining different choices in the implementation of ICE in this dissertation, we propose a method to eliminate the discrepancies and suppress the nonphysical oscillations in the numerical solutions of Production ICE. This improved Production ICE method (IMPICE) is extended to solve the multidimensional Euler equations. The discussion of the IMPICE method for multidimensional compressible flow problems includes the method's detailed implementation and embedded boundary implementation. Finally, we propose a discrete adjoint-based approach to estimate the spatial and temporal errors in the numerical solutions obtained from IMPICE.
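
For readers unfamiliar with the particle-to-grid projection step mentioned in the abstract above, the following is a minimal one-dimensional sketch using standard linear (hat) shape functions. It is not taken from the dissertation or from Uintah; the function name `project_mass_to_grid` and its parameters are hypothetical, and the grid is assumed to be uniform with nodes at 0, dx, 2*dx, and so on.

```python
def project_mass_to_grid(positions, masses, num_nodes, dx):
    """Project particle masses onto a uniform 1D grid with linear shape functions.

    Illustrative only: nodes sit at i * dx for i in range(num_nodes), and every
    particle position is assumed to lie inside [0, (num_nodes - 1) * dx].
    """
    grid_mass = [0.0] * num_nodes
    for x, m in zip(positions, masses):
        # Index of the node to the left of the particle (clamped at the last cell).
        i = min(int(x // dx), num_nodes - 2)
        # Fractional distance from the left node; this is the right node's weight.
        w = (x - i * dx) / dx
        grid_mass[i] += m * (1.0 - w)
        grid_mass[i + 1] += m * w
    return grid_mass


grid = project_mass_to_grid([0.25, 1.5], [1.0, 2.0], num_nodes=3, dx=1.0)
```

Because the two weights of each particle sum to one, total mass is conserved by the projection. The nodal weights change form when a particle moves from one cell to the next, which is the cell-crossing situation the abstract refers to.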

2011

C. Brownlee, V. Pegoraro, S. Shankar, P.S. McCormick, C.D. Hansen.

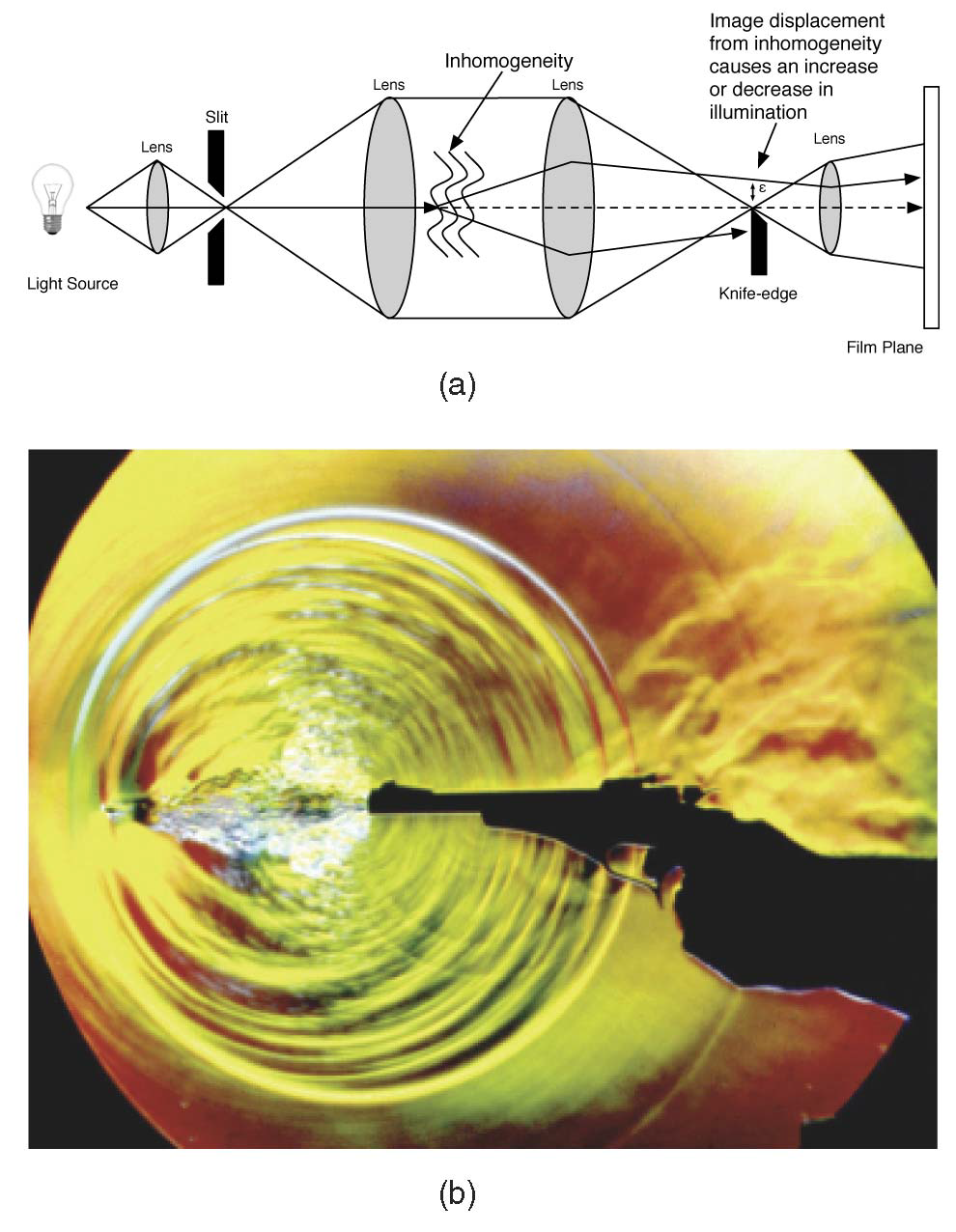

“Physically-Based Interactive Flow Visualization Based on Schlieren and Interferometry Experimental Techniques,” In IEEE Transactions on Visualization and Computer Graphics, Vol. 17, No. 11, pp. 1574--1586. 2011.

Understanding fluid flow is a difficult problem and of increasing importance as computational fluid dynamics (CFD) produces an abundance of simulation data. Experimental flow analysis has employed techniques such as shadowgraph, interferometry, and schlieren imaging for centuries, which allow empirical observation of inhomogeneous flows. Shadowgraphs provide an intuitive way of looking at small changes in flow dynamics through caustic effects while schlieren cutoffs introduce an intensity gradation for observing large scale directional changes in the flow. Interferometry tracks changes in phase-shift resulting in bands appearing. The combination of these shading effects provides an informative global analysis of overall fluid flow. Computational solutions for these methods have proven too complex until recently due to the fundamental physical interaction of light refracting through the flow field. In this paper, we introduce a novel method to simulate the refraction of light to generate synthetic shadowgraph, schlieren and interferometry images of time-varying scalar fields derived from computational fluid dynamics data. Our method computes physically accurate schlieren and shadowgraph images at interactive rates by utilizing a combination of GPGPU programming, acceleration methods, and data-dependent probabilistic schlieren cutoffs. Applications of our method to multifield data and custom application-dependent color filter creation are explored. Results comparing this method to previous schlieren approximations are finally presented.

Keywords: Uintah, C-SAFE

I. Hunsaker, T. Harman, J. Thornock, P.J. Smith.

“Efficient Parallelization of RMCRT for Large Scale LES Combustion Simulations,” In Proceedings of the 20th AIAA Computational Fluid Dynamics Conference, 2011.

DOI: 10.2514/6.2011-3770

K. Kamojjala, R.M. Brannon.

“Verification Of Frame Indifference For Complicated Numerical Constitutive Models,” In Proceedings of the ASME Early Career Technical Conference, 2011.

The principle of material frame indifference requires spatial stresses to rotate with the material, whereas reference stresses must be insensitive to rotation. Testing of a classical uniaxial strain problem with superimposed rotation reveals that a very common approach to strong incremental objectivity taken in finite element codes to satisfy frame indifference (namely working in an approximate un-rotated frame) fails this simplistic test. A more complicated verification example is constructed based on the method of manufactured solutions (MMS) which involves the same character of loading at all points, providing a means to test any nonlinear-elastic arbitrarily anisotropic constitutive model.
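
The requirement stated in the first sentence of this abstract can be written compactly. The notation below is a standard continuum-mechanics convention and is an assumption here, not taken from the paper: $Q$ is a superimposed proper rotation, $F$ the deformation gradient, $J = \det F$, $\sigma$ the Cauchy (spatial) stress, and $S$ the second Piola-Kirchhoff (reference) stress.

```latex
\[
F^{*} = Q F, \qquad
\sigma^{*} = Q\,\sigma\,Q^{T}, \qquad
S^{*} = J\,(QF)^{-1}\,\bigl(Q\,\sigma\,Q^{T}\bigr)\,(QF)^{-T}
      = J\,F^{-1}\,\sigma\,F^{-T} = S .
\]
```

The last equality uses $Q^{T}Q = I$ and $\det Q = 1$, so the spatial stress rotates with the material while the reference stress is unchanged.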

J.P. Luitjens.

“The Scalability of Parallel Adaptive Mesh Refinement Within Uintah,” Note: Advisor: Martin Berzins, School of Computing, University of Utah, 2011.

Solutions to Partial Differential Equations (PDEs) are often computed by discretizing the domain into a collection of computational elements referred to as a mesh. This solution is an approximation with an error that decreases as the mesh spacing decreases. However, decreasing the mesh spacing also increases the computational requirements. Adaptive mesh refinement (AMR) attempts to reduce the error while limiting the increase in computational requirements by refining the mesh locally in regions of the domain that have large error while maintaining a coarse mesh in other portions of the domain. This approach often provides a solution that is as accurate as that obtained from a much larger fixed mesh simulation, thus saving on both computational time and memory. However, historically, these AMR operations often limit the overall scalability of the application.

Adapting the mesh at runtime necessitates scalable regridding and load balancing algorithms. This dissertation analyzes the performance bottlenecks for a widely used regridding algorithm and presents two new algorithms which exhibit ideal scalability. In addition, a scalable space-filling curve generation algorithm for dynamic load balancing is also presented. The performance of these algorithms is analyzed by determining their theoretical complexity, deriving performance models, and comparing the observed performance to those performance models. The models are then used to predict performance on larger numbers of processors. This analysis demonstrates the necessity of these algorithms at larger numbers of processors. This dissertation also investigates methods to more accurately predict workloads based on measurements taken at runtime. While the methods used are not new, the application of these methods to the load balancing process is. These methods are shown to be highly accurate and able to predict the workload within 3% error. By improving the accuracy of these estimations, the load imbalance of the simulation can be reduced, thereby increasing the overall performance.
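
As a rough illustration of load balancing with a space-filling curve, the sketch below orders 2D patches along a Morton (Z-order) curve and cuts the ordering into contiguous pieces of roughly equal cost. The Morton curve is chosen here only for brevity; the abstract does not state which curve the dissertation uses. The names `morton_key` and `partition_by_curve`, and the `(x, y, cost)` patch layout, are hypothetical.

```python
def morton_key(x, y, bits=16):
    """Interleave the bits of non-negative integers x and y (x in the even positions)."""
    key = 0
    for i in range(bits):
        key |= ((x >> i) & 1) << (2 * i)
        key |= ((y >> i) & 1) << (2 * i + 1)
    return key


def partition_by_curve(patches, num_ranks):
    """Assign (x, y, cost) patches to ranks as contiguous segments of the curve."""
    ordered = sorted(patches, key=lambda p: morton_key(p[0], p[1]))
    target = sum(p[2] for p in ordered) / num_ranks  # ideal cost per rank
    assignment = [[] for _ in range(num_ranks)]
    rank, accumulated = 0, 0.0
    for patch in ordered:
        # Advance to the next rank once the running cost passes this rank's share.
        while rank < num_ranks - 1 and accumulated >= target * (rank + 1):
            rank += 1
        assignment[rank].append(patch)
        accumulated += patch[2]
    return assignment


# A 4x4 grid of unit-cost patches split across four ranks.
patches = [(x, y, 1.0) for x in range(4) for y in range(4)]
ranks = partition_by_curve(patches, 4)
```

Because nearby positions on the curve tend to be nearby in space, each rank receives a spatially compact group of patches. In a real setting the costs would come from workload estimates such as the runtime-based predictions the abstract describes.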

J. Luitjens, M. Berzins.

“Scalable parallel regridding algorithms for block-structured adaptive mesh refinement,” In Concurrency and Computation: Practice and Experience, Vol. 23, No. 13, pp. 1522--1537. September, 2011.

DOI: 10.1002/cpe.1719

Block-structured adaptive mesh refinement (BSAMR) is widely used within simulation software because it improves the utilization of computing resources by refining the mesh only where necessary. For BSAMR to scale onto existing petascale and eventually exascale computers, all portions of the simulation need to weak scale ideally. Any portions of the simulation that do not will become a bottleneck at larger numbers of cores. The challenge is to design algorithms that will make it possible to avoid these bottlenecks on exascale computers. One step of existing BSAMR algorithms involves determining where to create new patches of refinement. The Berger–Rigoutsos algorithm is commonly used to perform this task. This paper provides a detailed analysis of the performance of two existing parallel implementations of the Berger–Rigoutsos algorithm and develops a new parallel implementation of the Berger–Rigoutsos algorithm and a tiled algorithm that exhibits ideal scalability. The analysis and computational results up to 98,304 cores are used to design performance models which are then used to predict how these algorithms will perform on 100M cores.
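
To make "weak scale ideally" concrete: in a weak-scaling study the work per core is held fixed while the core count grows, so ideal behavior means the run time stays constant. The sketch below computes the usual efficiency ratio against the smallest run. The function name and the timings are hypothetical and are not results from the paper.

```python
def weak_scaling_efficiency(timings):
    """Return {cores: efficiency} relative to the smallest core count.

    timings maps core count -> wall-clock seconds, measured with the same
    amount of work per core in every run. Efficiency 1.0 is ideal.
    """
    base_time = timings[min(timings)]
    return {cores: base_time / t for cores, t in sorted(timings.items())}


# Made-up timings purely for illustration.
efficiency = weak_scaling_efficiency({1024: 10.0, 8192: 12.5})
```

Any portion of a code whose efficiency falls as the core count grows will eventually dominate the run time, which is the bottleneck the abstract warns about.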

Q. Meng, M. Berzins, J. Schmidt.

“Using Hybrid Parallelism to improve memory use in Uintah,” In Proceedings of the TeraGrid 2011 Conference, Salt Lake City, Utah, ACM, July, 2011.

DOI: 10.1145/2016741.2016767

The Uintah Software framework was developed to provide an environment for solving fluid-structure interaction problems on structured adaptive grids on large-scale, long-running, data-intensive problems. Uintah uses a combination of fluid-flow solvers and particle-based methods for solids together with a novel asynchronous task-based approach with fully automated load balancing. Uintah's memory use associated with ghost cells and global meta-data has become a barrier to scalability beyond O(100K) cores. A hybrid memory approach that addresses this issue is described and evaluated. The new approach based on a combination of Pthreads and MPI is shown to greatly reduce memory usage as predicted by a simple theoretical model, with comparable CPU performance.

Keywords: Uintah, C-SAFE, parallel computing

A. Sadeghirad, R.M. Brannon, J. Burghardt.

“A Convected Particle Domain Interpolation Technique To Extend Applicability of the Material Point Method for Problems Involving Massive Deformations,” In International Journal for Numerical Methods in Engineering, Vol. 86, No. 12, pp. 1435--1456. 2011.

DOI: 10.1002/nme.3110

A new algorithm is developed to improve the accuracy and efficiency of the material point method for problems involving extremely large tensile deformations and rotations. In the proposed procedure, particle domains are convected with the material motion more accurately than in the generalized interpolation material point method. This feature is crucial to eliminate instability in extension, which is a common shortcoming of most particle methods. Also, a novel alternative set of grid basis functions is proposed for efficiently calculating nodal force and consistent mass integrals on the grid. Specifically, by taking advantage of initially parallelogram-shaped particle domains, and treating the deformation gradient as constant over the particle domain, the convected particle domain is a reshaped parallelogram in the deformed configuration. Accordingly, an alternative grid basis function over the particle domain is constructed by a standard 4-node finite element interpolation on the parallelogram. Effectiveness of the proposed modifications is demonstrated using several large deformation solid mechanics problems.

A. Sadeghirad, R.M. Brannon, J. Guilkey.

“Enriched Convected Particle Domain Interpolation (CPDI) Method for Analyzing Weak Discontinuities,” In Particles, 2011.

P.J. Smith, M. Hradisky, J. Thornock, J. Spinti, D. Nguyen.

“Large eddy simulation of a turbulent buoyant helium plume,” In Proceedings of Supercomputing 2011 Companion, pp. 135--136. 2011.

DOI: 10.1145/2148600.2148671

At the Institute for Clean and Secure Energy at the University of Utah we are focused on education through interdisciplinary research on high-temperature fuel-utilization processes for energy generation, and the associated health, environmental, policy and performance issues. We also work closely with the government agencies and private industry companies to promote rapid deployment of new technologies through the use of high performance computational tools.

Buoyant flows are encountered in many situations of engineering and environmental importance, including fires, subsea and atmospheric exhaust phenomena, gas releases and geothermal events. Buoyancy-driven flows also play a key role in such physical processes as the spread of smoke or toxic gases from fires. As such, buoyant flow experiments are an important step in developing and validating simulation tools for numerical techniques such as Large Eddy Simulation (LES) for predictive use of complex systems. Large Eddy Simulation is a turbulence model that provides a much greater degree of resolution of physical scales than the more common Reynolds-Averaged Navier Stokes models. The validation activity requires increasing levels of complexity to sequentially quantify the effects of coupling increased physics, and to explore the effects of scale on the objectives of the simulation.

In this project we are using buoyant flows to examine the validity and accuracy of numerical techniques. By using the non-reacting buoyant helium plume flow we can study the generation of turbulence due to buoyancy, uncoupled from the complexities of combustion chemistry.

We are performing Large Eddy Simulation of a one-meter diameter buoyancy-driven helium plume using two software simulation tools -- ARCHES and Star-CCM+. ARCHES is a finite-volume Large Eddy Simulation code built within the Uintah framework, which is a set of software components and libraries that facilitate the solution of partial differential equations on structured adaptive mesh refinement grids using thousands of processors. Uintah is the product of a ten-year partnership with the Department of Energy's Advanced Simulation and Computing (ASC) program through the University of Utah's Center for Simulation of Accidental Fires and Explosions (C-SAFE). The ARCHES component was initially designed for predicting the heat-flux from large buoyant pool fires with potential hazards immersed in or near a pool fire of transportation fuel. Since then, this component has been extended to solve many industrially relevant problems such as industrial flares, oxy-coal combustion processes, and fuel gasification.

The second simulation tool, Star-CCM+, is a commercial, integrated software environment developed by CD-adapco that can be used to simulate the entire engineering simulation process. The engineering process can be started with CAD preparation, meshing, model setup, and continued with running simulations, post-processing, and visualizing the results. This allows for faster development and design turn-over time, especially for industry-type applications. Star-CCM+ was built from the ground up to provide scalable parallel performance. Furthermore, it is not only supported on the industry-standard Linux HPC platforms, but also on Windows HPC, allowing us to explore computational demands on both Linux and Windows-based HPC clusters.

P.J. Smith, J. Thornock, D. Hinckley, M. Hradisky.

“Large Eddy Simulation Of Industrial Flares,” In Proceedings of Supercomputing 2011 Companion, pp. 137--138. 2011.

DOI: 10.1145/2148600.2148672

At the Institute for Clean and Secure Energy at the University of Utah we are focused on education through interdisciplinary research on high-temperature fuel-utilization processes for energy generation, and the associated health, environmental, policy and performance issues. We also work closely with the government agencies and private industry companies to promote rapid deployment of new technologies through the use of high performance computational tools.

Industrial flare simulation can provide important information on combustion efficiency, pollutant emissions, and operational parameter sensitivities for design or operation that cannot be measured. These simulations provide information that may help design or operate flares so as to reduce or eliminate harmful pollutants and increase combustion efficiency.

Fires and flares have been particularly difficult to simulate with traditional computational fluid dynamics (CFD) simulation tools that are based on Reynolds-Averaged Navier-Stokes (RANS) approaches. The large-scale mixing due to vortical coherent structures in these flames is not readily reduced to steady-state CFD calculations with RANS.

Simulation of combustion using Large Eddy Simulations (LES) has made it possible to more accurately simulate the complex combustion seen in these flares. Resolution of all length and time scales is not possible even for the largest supercomputers. LES is a numerical technique that resolves the large length and time scales while using models for the more homogeneous smaller scales. By using LES, the simulated combustion dynamics capture the puffing created by buoyancy in industrial flares.

All of our simulations were performed using either the University of Utah's ARCHES simulation tool or the commercially available Star-CCM+ software. ARCHES is a finite-volume Large Eddy Simulation code built within the Uintah framework, which is a set of software components and libraries that facilitate the solution of partial differential equations on structured adaptive mesh refinement grids using thousands of processors. Uintah is the product of a ten-year partnership with the Department of Energy's Advanced Simulation and Computing (ASC) program through the University of Utah's Center for Simulation of Accidental Fires and Explosions (C-SAFE). The ARCHES component was initially designed for predicting the heat-flux from large buoyant pool fires with potential hazards immersed in or near a pool fire of transportation fuel. Since then, this component has been extended to solve many industrially relevant problems such as industrial flares, oxy-coal combustion processes, and fuel gasification.

In this report we showcase selected results that help us visualize and understand the physical processes occurring in the simulated systems.

Most of the simulations were completed on the University of Utah's Updraft and Ember high performance computing clusters, which are managed by the Center for High Performance Computing. High performance computational tools are essential in our effort to successfully answer all aspects of our research areas and we promote the use of high performance computational tools beyond the research environment by directly working with our industry partners.

J. Sutherland, T. Saad.

“The Discrete Operator Approach to the Numerical Solution of Partial Differential Equations,” In Proceedings of the 20th AIAA Computational Fluid Dynamics Conference, Honolulu, Hawaii, pp. AIAA-2011-3377. 2011.

DOI: 10.2514/6.2011-3377

J. Sutherland, T. Saad.

“A Novel Computational Framework for Reactive Flow and Multiphysics Simulations,” Note: AIChE Annual Meeting, 2011.

L.T. Tran, M. Berzins.

“IMPICE Method for Compressible Flow Problems in Uintah,” In International Journal For Numerical Methods In Fluids, Note: Published online 20 July, 2011.

2010

M. Berzins, J. Luitjens, Q. Meng, T. Harman, C.A. Wight, J.R. Peterson.

“Uintah: A Scalable Framework for Hazard Analysis,” In Proceedings of the TeraGrid 2010 Conference, TG 10, Note: Awarded Best Paper in the Science Track!, pp. (published online). July, 2010.

ISBN: 978-1-60558-818-6

DOI: 10.1145/1838574.1838577

The Uintah Software system was developed to provide an environment for solving fluid-structure interaction problems on structured adaptive grids on large-scale, long-running, data-intensive problems. Uintah uses a novel asynchronous task-based approach with fully automated load balancing. The application of Uintah to a petascale problem in hazard analysis arising from "sympathetic" explosions, in which the collective interactions of a large ensemble of explosives result in dramatically increased explosion violence, is considered. The advances in scalability and combustion modeling needed to begin to solve this problem are discussed and illustrated by prototypical computational results.

Keywords: Uintah, C-SAFE

R.M. Brannon, S. Leelavanichkul.

“A Multi-Stage Return Algorithm for Solving the Classical Damage Component of Constitutive Models for Rocks, Ceramics, and Other Rock-Like Media,” In International Journal of Fracture, Vol. 163, No. 1-2, pp. 133--149. 2010.

DOI: 10.1007/s10704-009-9398-4

Classical plasticity and damage models for porous quasi-brittle media usually suffer from mathematical defects such as non-convergence and non-uniqueness. Yield or damage functions for porous quasi-brittle media often have contours so distorted that following those contours to the yield surface in a return algorithm can take the solution to a false elastic domain. A steepest-descent return algorithm must include iterative corrections; otherwise, the solution is non-unique because contours of any yield function are non-unique. A multi-stage algorithm has been developed to address both spurious convergence and non-uniqueness, as well as to improve efficiency. The region of pathological isosurfaces is masked by first returning the stress state to the Drucker–Prager surface circumscribing the actual yield surface. From there, steepest-descent is used to locate a point on the yield surface. This first-stage solution, which is extremely efficient because it is applied in a 2D subspace, is generally not the correct solution, but it is used to estimate the correct return direction. The first-stage solution is projected onto the estimated correct return direction in 6D stress space. Third invariant dependence and anisotropy are accommodated in this second-stage correction. The projection operation introduces errors associated with yield surface curvature, so the two-stage iteration is applied repeatedly to converge. Regions of extremely high curvature are detected and handled separately using an approximation to vertex theory. The multi-stage return is applied holding internal variables constant to produce a non-hardening solution. To account for hardening from pore collapse (or softening from damage), geometrical arguments are used to clearly illustrate the appropriate scaling of the non-hardening solution needed to obtain the hardening (or softening) solution.

J.A. Burghardt, B. Leavy, J. Guilkey, Z. Xue, R.M. Brannon.

“Application of Uintah-MPM to Shaped Charge Jet Penetration of Aluminum,” In IOP Conference Series: Materials Science and Engineering, Vol. 10, No. 1, pp. 012223. 2010.

The capability of the generalized interpolation material point (GIMP) method in simulation of penetration events is investigated. A series of experiments was performed wherein a shaped charge jet penetrates into a stack of aluminum plates. Electronic switches were used to measure the penetration time history. Flash x-ray techniques were used to measure the density, length, radius and velocity of the shaped charge jet. Simulations of the penetration event were performed using the Uintah MPM/GIMP code with several different models of the shaped charge jet being used. The predicted penetration time history for each jet model is compared with the experimentally observed penetration history. It was found that the characteristics of the predicted penetration were dependent on the way that the jet data are translated to a discrete description. The discrete jet descriptions were modified such that the predicted penetration histories fell very close to the range of the experimental data. In comparing the various discrete jet descriptions it was found that the cumulative kinetic energy flux curve represents an important way of characterizing the penetration characteristics of the jet. The GIMP method was found to be well suited for simulation of high rate penetration events.

B. Leavy, R.M. Brannon, O.E. Strack.

“The Use of Sphere Indentation Experiments to Characterize Ceramic Damage Models,” In International Journal of Applied Ceramic Technology, Vol. 7, No. 5, pp. 606--615. September/October, 2010.

DOI: 10.1111/j.1744-7402.2010.02487.x

Sphere impact experiments are used to calibrate and validate ceramic models that include statistical variability and/or scale effects in strength and toughness parameters. These dynamic experiments supplement traditional characterization experiments such as tension, triaxial compression, Brazilian, and plate impact, which are commonly used for ceramic model calibration. The fractured ceramic specimens are analyzed using sectioning, X-ray computed tomography, microscopy, and other techniques. These experimental observations indicate that a predictive material model must incorporate a standard deviation in strength that varies with the nature of the loading. Methods of using the spherical indentation data to calibrate a statistical damage model are presented in which it is assumed that variability in strength is tied to microscale stress concentrations associated with microscale heterogeneity.
