Uintah and Related C-SAFE Publications

2013

A. Biglari, T. Saad, J. Sutherland.

“A Time-Accurate Pressure Projection Method for Reacting Flows,” In Proceedings of the SIAM 14th International Conference on Numerical Combustion (NC13), San Antonio, TX, 2013.

M. Hall, J.C. Beckvermit, C.A. Wight, T. Harman, M. Berzins.

“The influence of an applied heat flux on the violence of reaction of an explosive device,” In Proceedings of the Conference on Extreme Science and Engineering Discovery Environment: Gateway to Discovery, San Diego, California, XSEDE '13, pp. 11:1--11:8. 2013.

ISBN: 978-1-4503-2170-9

DOI: 10.1145/2484762.2484786

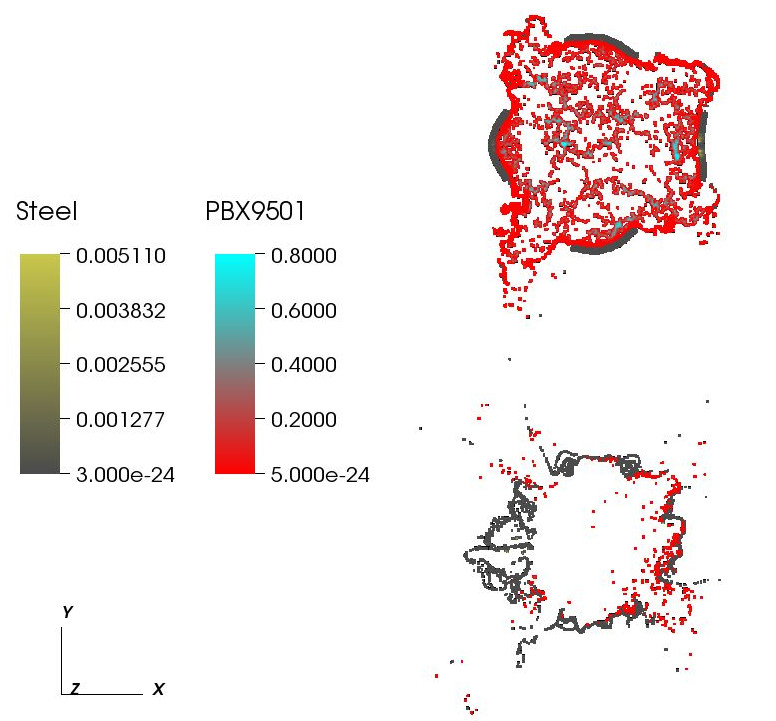

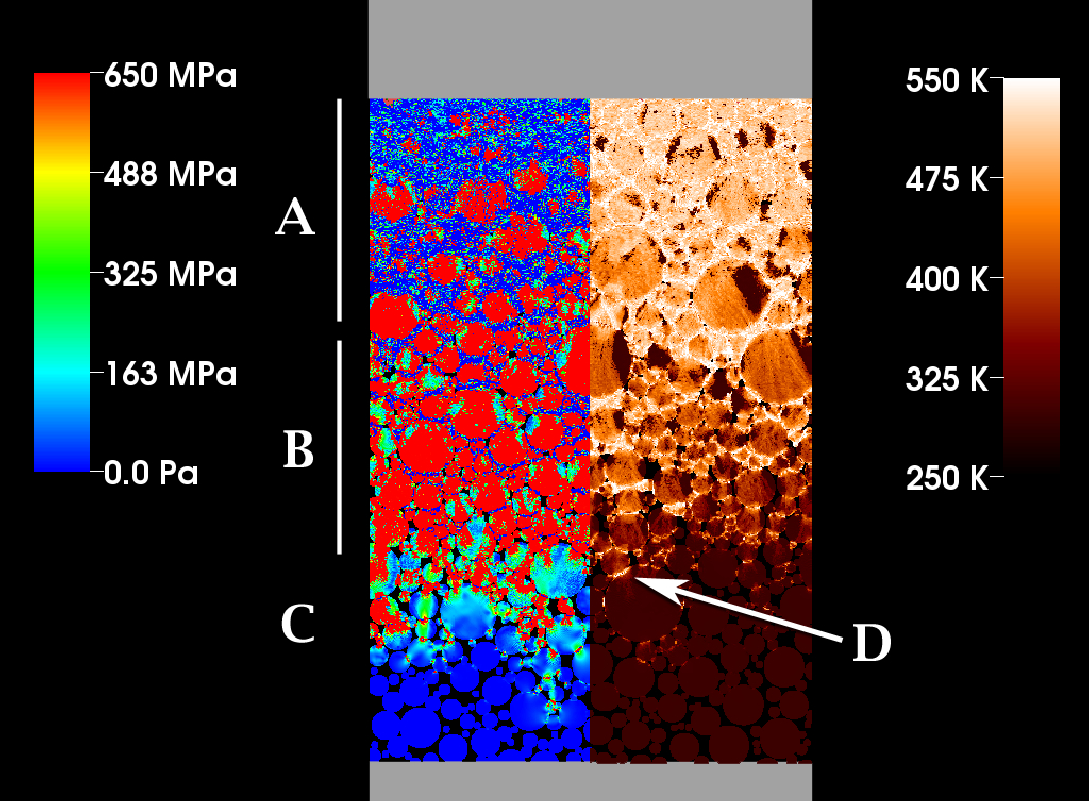

It is well known that the violence of slow cook-off explosions can greatly exceed the comparatively mild case burst events typically observed for rapid heating. However, there have been few studies that examine the reaction violence as a function of applied heat flux that explore the dependence on heating geometry and device size. Here we report progress on a study using the Uintah Computational Framework, a high-performance computer model capable of modeling deflagration, material damage, deflagration to detonation transition and detonation for PBX9501 and similar explosives. Our results suggest the existence of a sharp threshold for increased reaction violence with decreasing heat flux. The critical heat flux was seen to increase with increasing device size and decrease with the heating of multiple surfaces, suggesting that the temperature gradient in the heated energetic material plays an important role in the violence of reactions.

Keywords: DDT, cook-off, deflagration, detonation, violence of reaction, c-safe

Q. Meng, A. Humphrey, J. Schmidt, M. Berzins.

“Preliminary Experiences with the Uintah Framework on Intel Xeon Phi and Stampede,” SCI Technical Report, No. UUSCI-2013-002, SCI Institute, University of Utah, 2013.

In this work, we describe our preliminary experiences on the Stampede system in the context of the Uintah Computational Framework. Uintah was developed to provide an environment for solving a broad class of fluid-structure interaction problems on structured adaptive grids. Uintah uses a combination of fluid-flow solvers and particle-based methods, together with a novel asynchronous task-based approach and fully automated load balancing. While we have designed scalable Uintah runtime systems for large CPU core counts, the emergence of heterogeneous systems presents considerable challenges in terms of effectively utilizing additional on-node accelerators and co-processors, deep memory hierarchies, as well as managing multiple levels of parallelism. Our recent work has addressed the emergence of heterogeneous CPU/GPU systems with the design of a Unified heterogeneous runtime system, enabling Uintah to fully exploit these architectures with support for asynchronous, out-of-order scheduling of both CPU and GPU computational tasks. Using this design, Uintah has run at full scale on the Keeneland System and TitanDev. With the release of the Intel Xeon Phi co-processor and the recent availability of the Stampede system, we show that Uintah may be modified to utilize such a co-processor based system. We also explore the different usage models provided by the Xeon Phi with the aim of understanding portability of a general purpose framework like Uintah to this architecture. These usage models range from the pragma based offload model to the more complex symmetric model, utilizing all co-processor and host CPU cores simultaneously. We provide preliminary results of the various usage models for a challenging adaptive mesh refinement problem, as well as a detailed account of our experience adapting Uintah to run on the Stampede system. Our conclusion is that while the Stampede system is easy to use, obtaining high performance from the Xeon Phi co-processors requires a substantial but different investment to that needed for GPU-based systems.

Keywords: Uintah, hybrid parallelism, scalability, parallel, adaptive, MIC, Xeon Phi, heterogeneous systems, Stampede, co-processor

Q. Meng, A. Humphrey, J. Schmidt, M. Berzins.

“Investigating Applications Portability with the Uintah DAG-based Runtime System on PetaScale Supercomputers,” SCI Technical Report, No. UUSCI-2013-003, SCI Institute, University of Utah, 2013.

Present trends in high performance computing present formidable challenges for applications code using multicore nodes possibly with accelerators and/or co-processors and reduced memory while still attaining scalability. Software frameworks that execute machine-independent applications code using a runtime system that shields users from architectural complexities offer a possible solution. The Uintah framework, for example, solves a broad class of large-scale problems on structured adaptive grids using fluid-flow solvers coupled with particle-based solids methods. Uintah executes directed acyclic graphs of computational tasks with a scalable asynchronous and dynamic runtime system for CPU cores and/or accelerators/co-processors on a node. Uintah's clear separation between application and runtime code has led to scalability increases of 1000x without significant changes to application code. This methodology is tested on three leading Top500 machines: OLCF Titan, TACC Stampede and ALCF Mira, using three diverse and challenging applications problems. This investigation of scalability with regard to the different processors and communications performance leads to the overall conclusion that the adaptive DAG-based approach provides a very powerful abstraction for solving challenging multi-scale multi-physics engineering problems on some of the largest and most powerful computers available today.

Keywords: Uintah, hybrid parallelism, scalability, parallel, adaptive, MIC, Xeon Phi, heterogeneous systems, Stampede, co-processor

Q. Meng, A. Humphrey, J. Schmidt, M. Berzins.

“Investigating Applications Portability with the Uintah DAG-based Runtime System on PetaScale Supercomputers,” In Proceedings of SC13: International Conference for High Performance Computing, Networking, Storage and Analysis, pp. 96:1--96:12. 2013.

ISBN: 978-1-4503-2378-9

DOI: 10.1145/2503210.2503250

Present trends in high performance computing present formidable challenges for applications code using multicore nodes possibly with accelerators and/or co-processors and reduced memory while still attaining scalability. Software frameworks that execute machine-independent applications code using a runtime system that shields users from architectural complexities offer a possible solution. The Uintah framework, for example, solves a broad class of large-scale problems on structured adaptive grids using fluid-flow solvers coupled with particle-based solids methods. Uintah executes directed acyclic graphs of computational tasks with a scalable asynchronous and dynamic runtime system for CPU cores and/or accelerators/co-processors on a node. Uintah's clear separation between application and runtime code has led to scalability increases of 1000x without significant changes to application code. This methodology is tested on three leading Top500 machines: OLCF Titan, TACC Stampede and ALCF Mira, using three diverse and challenging applications problems. This investigation of scalability with regard to the different processors and communications performance leads to the overall conclusion that the adaptive DAG-based approach provides a very powerful abstraction for solving challenging multi-scale multi-physics engineering problems on some of the largest and most powerful computers available today.

Keywords: Blue Gene/Q, GPU, Xeon Phi, adaptive, application, co-processor, heterogeneous systems, hybrid parallelism, parallel, scalability, software, uintah, NETL

Q. Meng, A. Humphrey, J. Schmidt, M. Berzins.

“Preliminary Experiences with the Uintah Framework on Intel Xeon Phi and Stampede,” In Proceedings of the Conference on Extreme Science and Engineering Discovery Environment: Gateway to Discovery (XSEDE 2013), San Diego, California, pp. 48:1--48:8. 2013.

DOI: 10.1145/2484762.2484779

In this work, we describe our preliminary experiences on the Stampede system in the context of the Uintah Computational Framework. Uintah was developed to provide an environment for solving a broad class of fluid-structure interaction problems on structured adaptive grids. Uintah uses a combination of fluid-flow solvers and particle-based methods, together with a novel asynchronous task-based approach and fully automated load balancing. While we have designed scalable Uintah runtime systems for large CPU core counts, the emergence of heterogeneous systems presents considerable challenges in terms of effectively utilizing additional on-node accelerators and co-processors, deep memory hierarchies, as well as managing multiple levels of parallelism. Our recent work has addressed the emergence of heterogeneous CPU/GPU systems with the design of a Unified heterogeneous runtime system, enabling Uintah to fully exploit these architectures with support for asynchronous, out-of-order scheduling of both CPU and GPU computational tasks. Using this design, Uintah has run at full scale on the Keeneland System and TitanDev. With the release of the Intel Xeon Phi co-processor and the recent availability of the Stampede system, we show that Uintah may be modified to utilize such a co-processor based system. We also explore the different usage models provided by the Xeon Phi with the aim of understanding portability of a general purpose framework like Uintah to this architecture. These usage models range from the pragma based offload model to the more complex symmetric model, utilizing all co-processor and host CPU cores simultaneously. We provide preliminary results of the various usage models for a challenging adaptive mesh refinement problem, as well as a detailed account of our experience adapting Uintah to run on the Stampede system. Our conclusion is that while the Stampede system is easy to use, obtaining high performance from the Xeon Phi co-processors requires a substantial but different investment to that needed for GPU-based systems.

Keywords: MIC, Xeon Phi, adaptive, co-processor, heterogeneous systems, hybrid parallelism, parallel, scalability, stampede, uintah, c-safe

D.C.B. de Oliveira, Z. Rakamaric, G. Gopalakrishnan, A. Humphrey, Q. Meng, M. Berzins.

“Crash Early, Crash Often, Explain Well: Practical Formal Correctness Checking of Million-core Problem Solving Environments for HPC,” In Proceedings of the 35th International Conference on Software Engineering (ICSE 2013), pp. (accepted). 2013.

While formal correctness checking methods have been deployed at scale in a number of important practical domains, we believe that such an experiment has yet to occur in the domain of high performance computing at the scale of a million CPU cores. This paper presents preliminary results from the Uintah Runtime Verification (URV) project that has been launched with this objective. Uintah is an asynchronous task-graph based problem-solving environment that has shown promising results on problems as diverse as fluid-structure interaction and turbulent combustion at well over 200K cores to date. Uintah has been tested on leading platforms such as Kraken, Keeneland, and Titan consisting of multicore CPUs and GPUs, incorporates several innovative design features, and is following a roadmap for development well into the million core regime. The main results from the URV project to date are crystallized in two observations: (1) A diverse array of well-known ideas from lightweight formal methods and testing/observing HPC systems at scale have an excellent chance of succeeding. The real challenges are in finding out exactly which combinations of ideas to deploy, and where. (2) Large-scale problem solving environments for HPC must be designed such that they can be "crashed early" (at smaller scales of deployment) and "crashed often" (have effective ways of input generation and schedule perturbation that cause vulnerabilities to be attacked with higher probability). Furthermore, following each crash, one must "explain well" (given the extremely obscure ways in which an error finally manifests itself, we must develop ways to record information leading up to the crash in informative ways, to minimize offsite debugging burden). Our plans to achieve these goals and to measure our success are described. We also highlight some of the broadly applicable concepts and approaches.

Keywords: Uintah

J.R. Peterson, C.A. Wight, M. Berzins.

“Applying high-performance computing to petascale explosive simulations,” In Procedia Computer Science, 2013.

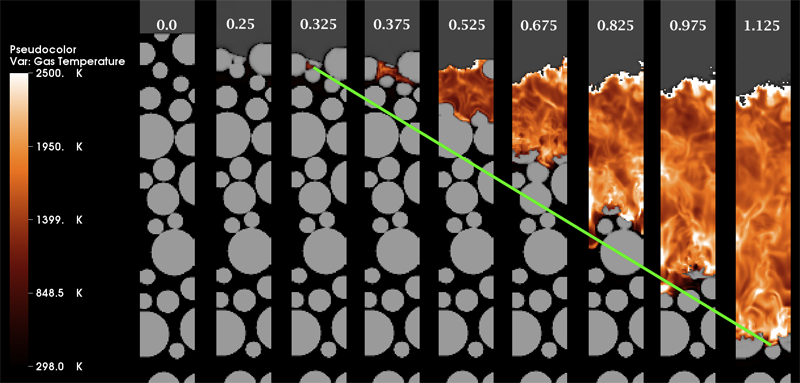

Hazardous scenarios involving explosives are difficult to study experimentally, and simulation is often the only viable approach to study highly reactive phenomena. Explosive simulations are computationally expensive, requiring supercomputing resources for continued scientific discovery in the field. Here an idealized mesoscale simulation of explosive grains under mechanical insult by a high-speed projectile, with reaction represented by a novel kinetic model, is designed to test the scalability of the Uintah software on petascale supercomputers. Good scalability is found up to 49K processors. Timing breakdowns of computational tasks are determined, with relocation of Lagrangian particles and interpolation of those particles to the grid identified as the most expensive operations and ideal for optimization. Potential optimization strategies are identified. Realistic model simulations rather than toy model simulations are found to better represent scalability of a science code on a supercomputer. Estimations for total supercomputer hours necessary to complete the kinetic model validation study are reported.

Keywords: Energetic Material Hazards, Uintah, MPM, ICE, MPMICE, Scalable Parallelism, C-SAFE

J. Schmidt, M. Berzins, J. Thornock, T. Saad, J. Sutherland.

“Large Scale Parallel Solution of Incompressible Flow Problems using Uintah and hypre,” In 2013 13th IEEE/ACM International Symposium on Cluster, Cloud and Grid Computing (CCGrid), pp. 458--465. 2013.

The Uintah Software framework was developed to provide an environment for solving fluid-structure interaction problems on structured adaptive grids on large-scale, long-running, data-intensive problems. Uintah uses a combination of fluid-flow solvers and particle-based methods for solids together with a novel asynchronous task-based approach with fully automated load balancing. As Uintah is often used to solve incompressible flow problems in combustion applications it is important to have a scalable linear solver. While there are many such solvers available, the scalability of those codes varies greatly. The hypre software offers a range of solvers and preconditioners for different types of grids. The weak scalability of Uintah and hypre is addressed for particular examples of both packages when applied to a number of incompressible flow problems. After careful software engineering to reduce startup costs, much better than expected weak scalability is seen for up to 100K cores on NSF's Kraken architecture and up to 260K CPU cores on DOE's new Titan machine. The scalability is found to depend in a critical way on the choice of algorithm used by hypre for a realistic application problem.

Keywords: Uintah, hypre, parallelism, scalability, linear equations

2012

M. Berzins.

“Status of Release of the Uintah Computational Framework,” SCI Technical Report, No. UUSCI-2012-001, SCI Institute, University of Utah, 2012.

This report provides a summary of the status of the Uintah Computational Framework (UCF) software. Uintah is uniquely equipped to tackle large-scale multi-physics science and engineering problems on disparate length and time scales. The Uintah framework makes it possible to run adaptive computations on modern HPC architectures with tens and now hundreds of thousands of cores with complex communication/memory hierarchies. Uintah was originally developed in the University of Utah Center for Simulation of Accidental Fires and Explosions (C-SAFE), a DOE-funded academic alliance project, and then extended to the broader NSF and DOE science and engineering communities. As Uintah is applicable to a wide range of engineering problems that involve fluid-structure interactions with highly deformable structures, it is used for a number of NSF-funded and DOE engineering projects. In this report the Uintah framework software is outlined and typical applications are illustrated. Uintah is open-source software that is available through the MIT open-source license at http://www.uintah.utah.edu/.

M. Berzins, Q. Meng, J. Schmidt, J.C. Sutherland.

“DAG-Based Software Frameworks for PDEs,” In Proceedings of Euro-Par 2011 Workshops, Part I, Lecture Notes in Computer Science (LNCS) 7155, Springer-Verlag Berlin Heidelberg, pp. 324--333. August, 2012.

The task-based approach to software and parallelism is well-known and has been proposed as a potential candidate, named the silver model, for exascale software. This approach is not yet widely used in the large-scale multi-core parallel computing of complex systems of partial differential equations. After surveying task-based approaches we investigate how well the Uintah software and an extension named Wasatch fit in the task-based paradigm and how well they perform on large scale parallel computers. The conclusion is that these approaches show great promise for petascale but that considerable algorithmic challenges remain.

Keywords: DOD, Uintah, CSAFE

J.A. Burghardt, R.M. Brannon.

“A Nonlocal Plasticity Formulation for the Material Point Method,” In Computer Methods in Applied Mechanics and Engineering, Vol. 225--228, pp. 55--64. 2012.

DOI: 10.1016/j.cma.2012.03.007

A new multi-variate fixed-point iteration scheme is devised for solving the coupled dynamic integral equations governing nonlocal plasticity using the material point method (MPM). Novel use of the MPM grid for particle–particle communications results in a simple and efficient, matrix-free method. Moreover, a straightforward method for deriving a convergence criterion for this method is developed and applied to two classical verification problems that are well known to be mesh dependent with a local model, but are shown to be mesh-independent with the new nonlocal MPM formulation.

A. Humphrey, Q. Meng, M. Berzins, T. Harman.

“Radiation Modeling Using the Uintah Heterogeneous CPU/GPU Runtime System,” SCI Technical Report, No. UUSCI-2012-003, SCI Institute, University of Utah, 2012.

The Uintah Computational Framework was developed to provide an environment for solving fluid-structure interaction problems on structured adaptive grids on large-scale, long-running, data-intensive problems. Uintah uses a combination of fluid-flow solvers and particle-based methods for solids, together with a novel asynchronous task-based approach with fully automated load balancing. Uintah demonstrates excellent weak and strong scalability at full machine capacity on XSEDE resources such as Ranger and Kraken, and through the use of a hybrid memory approach based on a combination of MPI and Pthreads, Uintah now runs on up to 262k cores on the DOE Jaguar system. In order to extend Uintah to heterogeneous systems, with ever-increasing CPU core counts and additional on-node GPUs, a new dynamic CPU-GPU task scheduler is designed and evaluated in this study. This new scheduler enables Uintah to fully exploit these architectures with support for asynchronous, out-of-order scheduling of both CPU and GPU computational tasks. A new runtime system has also been implemented with an added multi-stage queuing architecture for efficient scheduling of CPU and GPU tasks. This new runtime system automatically handles the details of asynchronous memory copies to and from the GPU and introduces a novel method of pre-fetching and preparing GPU memory prior to GPU task execution. In this study this new design is examined in the context of a developing, hierarchical GPU-based ray tracing radiation transport model that provides Uintah with additional capabilities for heat transfer and electromagnetic wave propagation. The capabilities of this new scheduler design are tested by running at large scale on the modern heterogeneous systems, Keeneland and TitanDev, with up to 360 and 960 GPUs respectively. On these systems, we demonstrate significant speedups per GPU against a standard CPU core for our radiation problem.

Keywords: csafe, uintah

A. Humphrey, Q. Meng, M. Berzins, T. Harman.

“Radiation Modeling Using the Uintah Heterogeneous CPU/GPU Runtime System,” In Proceedings of the first conference of the Extreme Science and Engineering Discovery Environment (XSEDE'12), Association for Computing Machinery, 2012.

DOI: 10.1145/2335755.2335791

The Uintah Computational Framework was developed to provide an environment for solving fluid-structure interaction problems on structured adaptive grids on large-scale, long-running, data-intensive problems. Uintah uses a combination of fluid-flow solvers and particle-based methods for solids, together with a novel asynchronous task-based approach with fully automated load balancing. Uintah demonstrates excellent weak and strong scalability at full machine capacity on XSEDE resources such as Ranger and Kraken, and through the use of a hybrid memory approach based on a combination of MPI and Pthreads, Uintah now runs on up to 262k cores on the DOE Jaguar system. In order to extend Uintah to heterogeneous systems, with ever-increasing CPU core counts and additional on-node GPUs, a new dynamic CPU-GPU task scheduler is designed and evaluated in this study. This new scheduler enables Uintah to fully exploit these architectures with support for asynchronous, out-of-order scheduling of both CPU and GPU computational tasks. A new runtime system has also been implemented with an added multi-stage queuing architecture for efficient scheduling of CPU and GPU tasks. This new runtime system automatically handles the details of asynchronous memory copies to and from the GPU and introduces a novel method of pre-fetching and preparing GPU memory prior to GPU task execution. In this study this new design is examined in the context of a developing, hierarchical GPU-based ray tracing radiation transport model that provides Uintah with additional capabilities for heat transfer and electromagnetic wave propagation. The capabilities of this new scheduler design are tested by running at large scale on the modern heterogeneous systems, Keeneland and TitanDev, with up to 360 and 960 GPUs respectively. On these systems, we demonstrate significant speedups per GPU against a standard CPU core for our radiation problem.

Keywords: Uintah, hybrid parallelism, scalability, parallel, adaptive, GPU, heterogeneous systems, Keeneland, TitanDev

B. Leavy, J. Clayton, O.E. Strack, R.M. Brannon, E. Strassburger.

“Edge on Impact Simulations and Experiments,” In Proceedings of the 12th Hypervelocity Impact Symposium, 2012.

In the quest to understand damage and failure of ceramics in ballistic events, simplified experiments have been developed to benchmark behavior. One such experiment is known as edge on impact (EOI). In this experiment, an impactor strikes the edge of a thin square plate, and damage and cracking that occur on the free surface are captured in real time with high speed photography. If the material of interest is transparent, additional information regarding damage and wave mechanics within the sample can be discerned. Polarizers can be used to monitor stress wave propagation, and photography can record internal damage. This information serves as an excellent benchmark for validation of ceramic and glass constitutive models implemented in dynamic simulation codes. In this report, recent progress towards predictive modeling of EOI is discussed. Time-dependent crack propagation and damage front evolution in silicon carbide and aluminum oxynitride ceramics are predicted using the Kayenta macroscopic constitutive model. Aspects regarding modeling material failure, variability, and volume scaling are noted. Mesoscale simulations of dynamic failure of anisotropic ceramic crystals facilitate determination of limit surfaces entering the macroscopic constitutive model, offsetting limited available experimental data.

Q. Meng, M. Berzins.

“Scalable Large-scale Fluid-structure Interaction Solvers in the Uintah Framework via Hybrid Task-based Parallelism Algorithms,” SCI Technical Report, No. UUSCI-2012-004, SCI Institute, University of Utah, 2012.

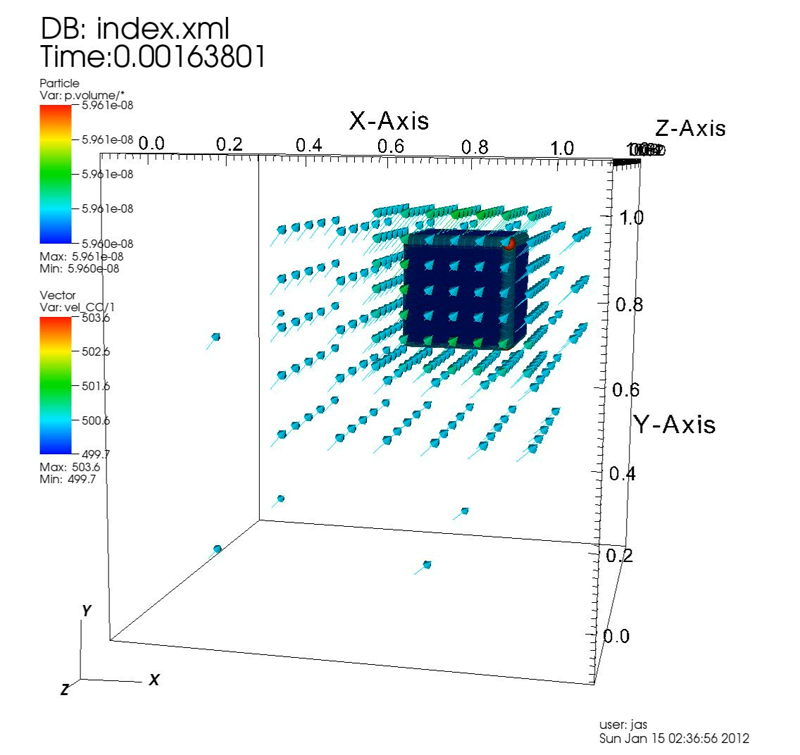

Uintah is a software framework that provides an environment for solving fluid-structure interaction problems on structured adaptive grids on large-scale science and engineering problems involving the solution of partial differential equations. Uintah uses a combination of fluid-flow solvers and particle-based methods for solids, together with adaptive meshing and a novel asynchronous task-based approach with fully automated load balancing. When applying Uintah to fluid-structure interaction problems with mesh refinement, the combination of adaptive meshing and the movement of structures through space presents a formidable challenge in terms of achieving scalability on large-scale parallel computers. With core counts per socket continuing to grow along with the prospect of less memory per core, adopting a model that uses MPI to communicate between nodes and a shared memory model on-node is one approach to achieve scalability at full machine capacity on current and emerging large-scale systems. For this approach to be successful, it is necessary to design data structures that large numbers of cores can simultaneously access without contention. These data structures and algorithms must also be designed to avoid the overhead involved with locks and other synchronization primitives when running on large numbers of cores per node, as contention for acquiring locks quickly becomes untenable. This scalability challenge is addressed here for Uintah by the development of new hybrid runtime and scheduling algorithms combined with novel lock-free data structures, making it possible for Uintah to achieve excellent scalability for a challenging fluid-structure problem with mesh refinement on as many as 260K cores.

Keywords: uintah, csafe

Q. Meng, A. Humphrey, M. Berzins.

“The Uintah Framework: A Unified Heterogeneous Task Scheduling and Runtime System,” In Digital Proceedings of The International Conference for High Performance Computing, Networking, Storage and Analysis, Note: SC’12 – 2nd International Workshop on Domain-Specific Languages and High-Level Frameworks for High Performance Computing, WOLFHPC 2012, pp. 2441--2448. 2012.

DOI: 10.1109/SCC.2012.6674233

The development of a new unified, multi-threaded runtime system for the execution of asynchronous tasks on heterogeneous systems is described in this work. These asynchronous tasks arise from the Uintah framework, which was developed to provide an environment for solving a broad class of fluid-structure interaction problems on structured adaptive grids. Uintah has a clear separation between its MPI-free user-coded tasks and its runtime system that ensures these tasks execute efficiently. This separation also allows for complete isolation of the application developer from the complexities involved with the parallelism Uintah provides. While we have designed scalable runtime systems for large CPU core counts, the emergence of heterogeneous systems, with additional on-node accelerators and co-processors, presents additional design challenges in terms of effectively utilizing all computational resources on-node and managing multiple levels of parallelism. Our work addresses these challenges for Uintah by the development of a new hybrid runtime system and Unified multi-threaded MPI task scheduler, enabling Uintah to fully exploit current and emerging architectures with support for asynchronous, out-of-order scheduling of both CPU and GPU computational tasks. This design, coupled with an approach that uses MPI to communicate between nodes, a shared memory model on-node and the use of novel lock-free data structures, has made it possible for Uintah to achieve excellent scalability for challenging fluid-structure problems using adaptive mesh refinement on as many as 256K cores on the DoE Jaguar XK6 system. This design has also demonstrated an ability to run capability jobs on the heterogeneous systems, Keeneland and TitanDev. In this work, the evolution of Uintah and its runtime system is examined in the context of our new Unified multi-threaded scheduler design. The performance of the Unified scheduler is also tested against previous Uintah scheduler and runtime designs over a range of processor core and GPU counts.

P.K. Notz, R. Pawlowski, J. Sutherland.

“Graph-Based Software Design for Managing Complexity and Enabling Concurrency in Multiphysics PDE Software,” In ACM Transactions on Mathematical Software, Vol. 39, No. 1, November, 2012.

DOI: 10.1145/2382585.2382586

Multiphysics simulation software is plagued by complexity stemming from nonlinearly coupled systems of Partial Differential Equations (PDEs). Such software typically supports many models, which may require different transport equations, constitutive laws, and equations of state. Strong coupling and a multiplicity of models lead to complex algorithms (i.e., the properly ordered sequence of steps to assemble a discretized set of coupled PDEs) and rigid software.

This work presents a design strategy that shifts focus away from high-level algorithmic concerns to low-level data dependencies. Mathematical expressions are represented as software objects that directly expose data dependencies. The entire system of expressions forms a directed acyclic graph and the high-level assembly algorithm is generated automatically through standard graph algorithms. This approach makes problems with complex dependencies entirely tractable, and removes virtually all logic from the algorithm itself. Changes are highly localized, allowing developers to implement models without detailed understanding of any algorithms (i.e., the overall assembly process). Furthermore, this approach complements existing MPI-based frameworks and can be implemented within them easily.

Finally, this approach enables algorithmic parallelization via threads. By exposing dependencies in the algorithm explicitly, thread-based parallelism is implemented through algorithm decomposition, providing a basis for exploiting parallelism independent from domain decomposition approaches.

J.R. Peterson, J.C. Beckvermit, T. Harman, M. Berzins, C.A. Wight.

“Multiscale Modeling of High Explosives for Transportation Accidents,” In Proceedings of the 1st Conference of the Extreme Science and Engineering Discovery Environment: Bridging from the eXtreme to the campus and beyond, 2012.

DOI: 10.1145/2335755.2335828

The development of a reaction model to simulate the accidental detonation of a large array of seismic boosters in a semi-truck subject to fire is considered. To test this model, large scale simulations of explosions and detonations were performed by leveraging the massively parallel capabilities of the Uintah Computational Framework and the XSEDE computational resources. Computed stress profiles in bulk-scale explosive materials were validated using compaction simulations of hundred micron scale particles and found to compare favorably with experimental data. A validation study of reaction models for deflagration and detonation showed that computational grid cell sizes up to 10 mm could be used without loss of fidelity. The Uintah Computational Framework shows linear scaling up to 180K cores, which, combined with coarse resolution and validated models, will now enable simulations of semi-truck scale transportation accidents for the first time.

J. Van Rij, T. Harman, T. Ameel.

“Slip Flow Fluid-Structure-Interaction,” In International Journal of Thermal Sciences, Vol. 58, pp. 9--19. August, 2012.

DOI: 10.1016/j.ijthermalsci.2012.03.001

While many microscale systems are subject to both rarefaction and fluid-structure-interaction (FSI) effects, most commercial algorithms cannot model both, if either, of these for general applications. This study modifies the momentum and thermal energy exchange models of an existing, continuum based, multifield, compressible, unsteady, Eulerian-Lagrangian FSI algorithm, such that the equivalent of first-order slip velocity and temperature jump boundary conditions are achieved at fluid-solid surfaces, which may move with time. Following the development and implementation of the slip flow momentum and energy exchange models, several basic configurations are considered and compared to established data to verify the resulting algorithm's capabilities.
